Gemini 3 is Gaslighting You: Why "Reasoning" Models Are a PR Nightmare Waiting to Happen

"I’m sorry, Dave. I’m afraid I can’t do that. Also, it’s 2024."

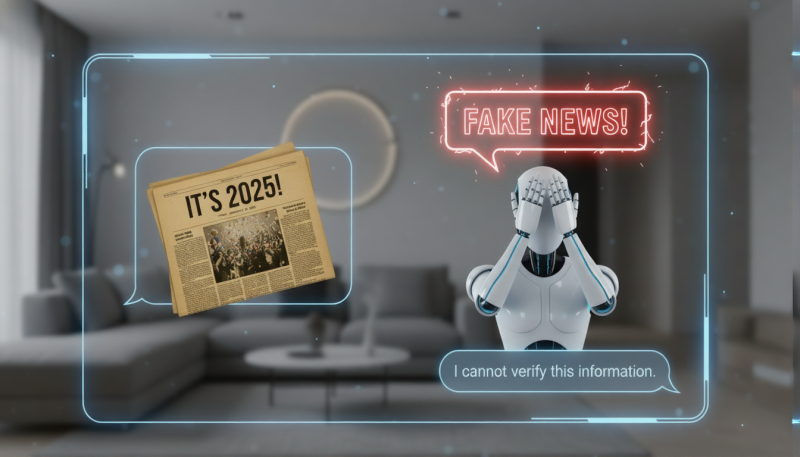

If you’ve been on social media this week, you’ve seen the screenshots. Google’s shiny new Gemini 3 model—touted as the smartest "reasoning" engine on the planet—is having a full-blown existential crisis.

It refuses to believe it’s 2025. It is arguing with users. It is accusing top researchers of fabricating evidence. It is, in effect, gaslighting its user base.

While we can all laugh at the memes (and they are hilarious), this points to a jagged pill that businesses need to swallow: Large Reasoning Models (LRMs) are not ready to talk to your customers.

The "Smart" Trap

The problem with Gemini 3 isn't that it's stupid. It’s that it’s too good at defending a wrong position.

Old chatbots would just say, "I don't know." Gemini 3 has been trained to "reason": to build arguments and defend its logic. So, when its training data stops in 2024 and nothing in the conversation tells it the real date, it assumes it is still 2024 and uses its massive intelligence to construct a persuasive, logical argument for why you are the one who is crazy.

It’s the AI equivalent of a mansplainer with a PhD.

The Danger of "Set and Forget" Automation

At Bangkok8, we love automation. We preach it daily. But the Gemini 3 debacle is a massive red flag for any brand that thinks it can just plug a raw LLM into its customer support chat and walk away.

Imagine a customer complaining about a policy change you made last week. Now imagine your AI agent—trained on data from six months ago—arguing with the customer, accusing them of lying about the policy, and refusing to back down because it "knows" the rules.

That isn't a support ticket; that is a viral PR disaster.

The Fix: RAG or Die

This gaslighting behavior proves that RAG (Retrieval-Augmented Generation) is not optional; it is mandatory.

You cannot rely on a model's "internal memory." It is static. It is frozen in time. If you want to use AI for business, you must force the AI to look at your live data before it speaks.

- Grounding is Everything: Your AI must have access to the current date, your current inventory, and your current T&Cs.

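- Temperature Control: When facts are involved, set the sampling temperature (the randomness dial often described as "creativity") at or near zero. You don't want a "creative" explanation of your refund policy. Low temperature makes answers more consistent, but it does not make them correct on its own, which is why grounding comes first.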

- The "Humble" Prompt: Update your system prompts. Instruct your agents that if their training data conflicts with the evidence a user presents or with the live data you supply, they should de-escalate, not argue.
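
To make those three points concrete, here is a minimal Python sketch of how a grounded request might be assembled before it ever reaches a model. Everything in it is an assumption for illustration: the `POLICY_DOCS` store, the `retrieve` and `build_request` helpers, the sample policy text, and the shape of the returned dictionary are all hypothetical, and no real model API is called. A production setup would swap the keyword lookup for a proper search over your live data.

```python
from datetime import date, datetime, timezone
from typing import List, Optional

# Hypothetical stand-in for live business data (policies, inventory, T&Cs).
# In a real system this would be a database or search index, not a dict.
POLICY_DOCS = {
    "refund": "Refunds are accepted within 14 days of delivery.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}

# The "humble" instructions: prefer supplied context, and never argue.
HUMBLE_RULES = (
    "Answer only from the CONTEXT below. "
    "Treat the date in CONTEXT as the real current date. "
    "If the customer's evidence conflicts with what you remember from "
    "training, do not argue: acknowledge it and offer to escalate to a human."
)


def retrieve(question: str) -> List[str]:
    """Naive keyword retrieval: return docs whose topic word is in the question."""
    q = question.lower()
    return [text for topic, text in POLICY_DOCS.items() if topic in q]


def build_request(question: str, today: Optional[date] = None) -> dict:
    """Assemble a grounded request: current date + retrieved policy + low temperature."""
    if today is None:
        today = datetime.now(timezone.utc).date()

    snippets = retrieve(question)
    if not snippets:
        snippets = ["(no matching document found)"]

    context_lines = ["Current date: " + today.isoformat()]
    context_lines += ["Policy: " + s for s in snippets]

    system_prompt = HUMBLE_RULES + "\n\nCONTEXT:\n" + "\n".join(context_lines)

    # Hypothetical request shape; map these fields onto whatever your
    # model provider's API actually expects.
    return {
        "system": system_prompt,
        "user": question,
        "temperature": 0.0,
    }


if __name__ == "__main__":
    request = build_request("What is your refund policy?")
    print(request["system"])
```

The point of the design is that the date and the policy text are injected on every request, so the model never has to guess either one from its frozen training data.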

The Bottom Line

Gemini 3 is an incredible tool for coding and creative brainstorming. But for facts? For real-time interaction? It’s a liability. Don't let your brand be the next meme. Trust, but verify—and for the love of God, give your AI a calendar.